40 pytorch dataloader without labels

Load custom image datasets into PyTorch DataLoader without ... Iterate DataLoader We have loaded that dataset into the DataLoader and can iterate through it as needed. Each iteration returns a batch of train_features and train_labels, containing batch_size=32 features and labels respectively. Because we specified shuffle=True, the data is reshuffled after we iterate over all batches.

Loading own train data and labels in dataloader using pytorch? # create a dataset like the one you describe from sklearn.datasets import make_classification x,y = make_classification() # load necessary pytorch packages from torch.utils.data import DataLoader, TensorDataset from torch import Tensor # create dataset from several tensors with matching first dimension # samples will be drawn from the first …
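The iteration described in these snippets can be sketched as follows. This is a minimal illustration, not the code from either source: the random tensors, their shapes (100 samples, 20 features) and the name `batch_shapes` are assumptions made for the example.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Assumed stand-in data: 100 samples with 20 features and binary labels.
features = torch.randn(100, 20)
labels = torch.randint(0, 2, (100,))

# TensorDataset pairs tensors that share the same first dimension.
dataset = TensorDataset(features, labels)
loader = DataLoader(dataset, batch_size=32, shuffle=True)

# Each iteration yields one batch of features and the matching labels.
batch_shapes = []
for train_features, train_labels in loader:
    batch_shapes.append((tuple(train_features.shape), tuple(train_labels.shape)))
```

With 100 samples and batch_size=32 the last batch is smaller than the others, because `drop_last` defaults to False.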

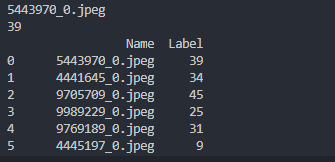

How to use Datasets and DataLoader in PyTorch for custom text data TD = CustomTextDataset (text_labels_df ['Text'], text_labels_df ['Labels']): This initialises the class we made earlier with the 'Text' and 'Labels' data being passed in. This data will become 'self.text' and 'self.labels' within the class. The Dataset is saved under the variable named TD. The Dataset is now initialised and ready to be used!
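A class of the kind described here might look like the sketch below. Only the names CustomTextDataset, self.text, self.labels and TD come from the snippet; the sample strings, the plain Python lists standing in for the DataFrame columns, and the choice to return a (text, label) tuple are assumptions.

```python
from torch.utils.data import Dataset, DataLoader

class CustomTextDataset(Dataset):
    """Pairs each text with its label (a sketch, not the article's exact code)."""

    def __init__(self, text, labels):
        self.text = text
        self.labels = labels

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        # Return one (text, label) sample; the return shape is an assumption.
        return self.text[idx], self.labels[idx]

# Hypothetical data standing in for text_labels_df['Text'] and ['Labels'].
texts = ["good", "bad", "great", "awful"]
labels = [1, 0, 1, 0]

TD = CustomTextDataset(texts, labels)
first_texts, first_labels = next(iter(DataLoader(TD, batch_size=2, shuffle=False)))
```

The default collate function leaves strings as a Python sequence and stacks the integer labels into a tensor.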

Pytorch dataloader without labels

Creating a custom Dataset and Dataloader in Pytorch - Medium A dataloader, in simple terms, is a function that iterates through all our available data and returns it in batches. For example if we have a dataset of 100 images, and we decide to batch...

How to load Images without using 'ImageFolder' - PyTorch Forums The DataLoader is not responsible for the data and target creation, but allows you to automatically create batches, use multiprocessing to load the data in the background, use custom samplers, shuffle the dataset etc. The Dataset defines how the data and target samples are created.

Issue with DataLoader with lr_finder.range_test #71 - GitHub inputs_labels_from_batch() was designed so that users do not have to modify their existing dataset or data loader code; you can implement your logic inside it. Note that the value returned by inputs_labels_from_batch() must be two array-like objects, just like line 41 shows:

PyTorch Dataloader + Examples - Python Guides Mar 26, 2022 · In this section, we will learn how the PyTorch dataloader can add dimensions in Python. The dataloader in PyTorch seems to add some additional dimensions after the batch dimension. Code: In the following code, we will import the torch module from which we can add a dimension.

DataLoader returns labels that do not exist in the DataSet - PyTorch Forums Jun 10, 2020 · When I pass this dataset to a DataLoader (with or without a sampler) it returns labels that are outside the label set, for example 112, 105 etc… I am very confused as to how this is happening as I tried to simplify things as much as possible and it still happens.

Datasets & DataLoaders - PyTorch Tutorials 1.11.0+cu102 documentation PyTorch provides two data primitives: torch.utils.data.DataLoader and torch.utils.data.Dataset that allow you to use pre-loaded datasets as well as your own data. Dataset stores the samples and their corresponding labels, and DataLoader wraps an iterable around the Dataset to enable easy access to the samples.

Multilabel Classification With PyTorch In 5 Minutes - Medium Our custom dataset and the dataloader work as intended. We get one dictionary per batch with the images and 3 target labels. With this we have the prerequisites for our multilabel classifier. Custom Multilabel Classifier (by the author) First, we load a pretrained ResNet34 and display the last 3 children elements.
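The "one dictionary per batch" behaviour mentioned in the multilabel snippet follows from the default collate function, which batches each dictionary key separately. The sketch below is illustrative only: the class name DictDataset, the key names and the tensor shapes are assumptions, not the article's code.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class DictDataset(Dataset):
    """Returns each sample as a dict with an image and three targets."""

    def __init__(self, n=8):
        # Assumed shapes: tiny 3x8x8 "images" and three labels per sample.
        self.images = torch.randn(n, 3, 8, 8)
        self.targets = torch.randint(0, 4, (n, 3))

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        t = self.targets[idx]
        return {
            "image": self.images[idx],
            "label_a": t[0],
            "label_b": t[1],
            "label_c": t[2],
        }

# The default collate function stacks the values of each key across the batch.
batch = next(iter(DataLoader(DictDataset(), batch_size=4)))
```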

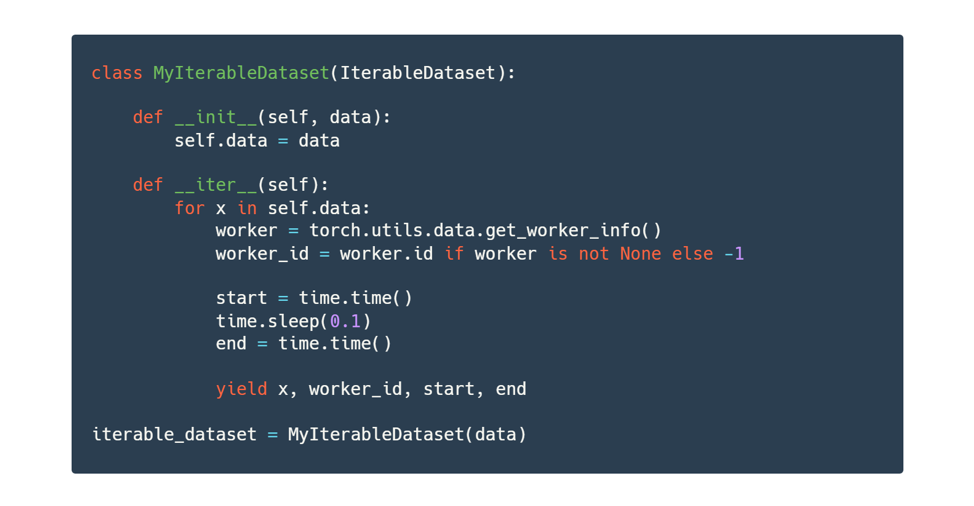

Load Pandas Dataframe using Dataset and DataLoader in PyTorch. Then, the file output is separated into features and labels accordingly. Finally, we convert our dataset into torch tensors. Create DataLoader. To train a deep learning model, we need to create a DataLoader from the dataset. DataLoaders offer multi-worker, multi-processing capabilities without requiring us to write code for that.

Developing Custom PyTorch Dataloaders Now that you've learned how to create a custom dataloader with PyTorch, we recommend diving deeper into the docs and customizing your workflow even further. You can learn more in the torch.utils.data docs.

A detailed example of data loaders with PyTorch pytorch data loader large dataset parallel ... A good way to keep track of samples and their labels is to adopt the following framework:

Data loader without labels? - PyTorch Forums Jan 19, 2020 · Yes, DataLoader doesn’t have any conditions on the number of outputs of your Dataset as seen here: class MyDataset(Dataset): def __init__(self): self.data = torch.randn(100, 1) def __getitem__(self, index): x = self.data[index] return x def __len__(self): return len(self.data) dataset = MyDataset() loader = DataLoader(dataset, batch ...
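The forum answer's point, that a Dataset may return a single value per sample, can be shown with a self-contained sketch. The class name UnlabeledDataset and batch_size=10 are assumptions for the example; the forum snippet's own DataLoader arguments are truncated and are not reproduced here.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class UnlabeledDataset(Dataset):
    """Returns only the input tensor for each index, with no target."""

    def __init__(self, data):
        self.data = data

    def __getitem__(self, index):
        return self.data[index]

    def __len__(self):
        return len(self.data)

dataset = UnlabeledDataset(torch.randn(100, 1))
loader = DataLoader(dataset, batch_size=10)  # batch_size chosen for illustration

# Each batch is a single tensor rather than a (data, label) pair.
batches = list(loader)
```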

Creating a dataloader without target values - PyTorch Forums I am trying to create a dataloader that will return batches of input data that doesn't have target data. Here's what I am doing: torch_input = torch.from_numpy(x_train) torch_target = torch.from_numpy(y_train) ds_x = torch.utils.data.TensorDataset(torch_input) ds_y = torch.utils.data.TensorDataset(torch_target) train_loader = torch ...

Manipulating Pytorch Datasets - Medium The class ConcatDataset takes in a list of multiple datasets and returns their concatenation. In this way, we obtain a dataset of 7000 samples and a dataloader with 274 batches. 3 ...

Writing ResNet from Scratch in PyTorch ResNet. Now that we have created the ResidualBlock, we can build our ResNet. Note that there are four blocks in the architecture, containing 3, 3, 6, and 3 layers respectively. To make this block, we create a helper function _make_layer. The function adds the layers one by one along with the Residual Block.

Loading Image using PyTorch - Medium 3. Data Loaders. After loading the data with ImageFolder, we have to pass it to DataLoader. It takes a data set and returns batches of images and corresponding labels. Here we can set batch_size and shuffle (True ...
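For the input-only case in the first forum question, one option is a TensorDataset built from a single tensor. The sketch below assumes random data in place of x_train (the question converts a NumPy array with torch.from_numpy) and an arbitrary batch_size of 20.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Assumed stand-in for the question's x_train.
x_train = torch.randn(50, 4)

ds_x = TensorDataset(x_train)  # a dataset with inputs only
train_loader = DataLoader(ds_x, batch_size=20)

# TensorDataset wraps every sample in a tuple, so a batch is a
# one-element sequence that has to be unpacked.
first = next(iter(train_loader))
(x_batch,) = first

batch_sizes = [xb.shape[0] for (xb,) in train_loader]
```

The one-element unpacking is the main thing to remember; writing `for x_batch in train_loader` would bind the wrapper sequence rather than the tensor.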

Custom Dataset and Dataloader in PyTorch - DebuggerCafe As usual, we import the required libraries in lines 8 and 10. From line 12 we start our custom ExampleDataset() class. Note that we inherit from the PyTorch Dataset class, which is important because inheriting from it lets us use all the features of the Dataset class.

Problem with Dataloader and labels · Issue #22566 - GitHub 6 Jul 2019 — ... with the help of the dataloader API, single predictions were often wrong, so I iterated through my images without the dataloade...

Pytorch dataset(sampler) - better-tomorrow.tistory.com 6. 21. 22:05. While reading some code I noticed that it used a sampler in PyTorch, so I wrote the short snippet below and checked its output to find out how the sampler behaves. import random import numpy as np import torch from torch.utils.data import Dataset, RandomSampler, BatchSampler random_seed = 8138 torch.manual_seed ...
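A small experiment in the same spirit, written from scratch rather than copied from the post: the seed value, the ten-item list and batch_size=4 are all assumptions chosen to keep the output easy to inspect.

```python
import torch
from torch.utils.data import RandomSampler, BatchSampler

torch.manual_seed(0)  # arbitrary seed for this sketch

data = list(range(10))

# RandomSampler yields every index of `data` once, in random order.
sampler = RandomSampler(data)

# BatchSampler groups those indices into lists of up to batch_size items.
batch_sampler = BatchSampler(sampler, batch_size=4, drop_last=False)

index_batches = list(batch_sampler)
```

Printing `index_batches` shows three lists of indices; with drop_last=False the final list holds the two leftover indices.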

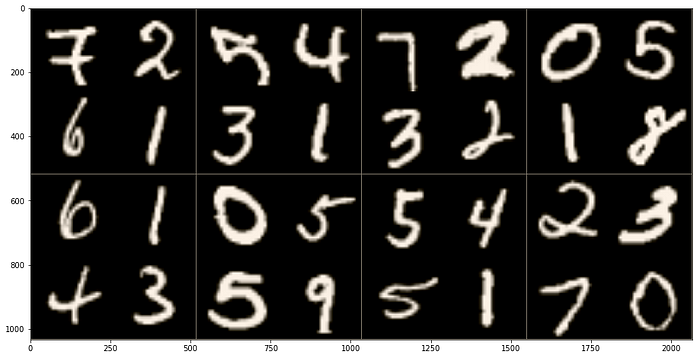

Create a pyTorch testing Dataset (without labels) - Stack ... Feb 24, 2021 · This works well for my training data, but I get an error ( KeyError: " ['label'] not found in axis") when loading the testing csv file, which is identical other than there being no "label" column. If it helps, the intended input csv file is MNIST data in csv file which has 28*28 feature columns.
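One way to avoid this kind of error is to treat the label column as optional when the Dataset is built. The sketch below is not the code from the question: it reads the CSV with Python's csv module instead of pandas, and the class name CsvDigits, the column names p0 and p1, and the two-pixel rows are assumptions made to keep the example short.

```python
import csv
import io

import torch
from torch.utils.data import Dataset

class CsvDigits(Dataset):
    """Pixel columns plus an optional label column (hypothetical helper)."""

    def __init__(self, csv_file, label_col="label"):
        rows = list(csv.DictReader(csv_file))
        # Only treat the data as labelled if the column is actually present.
        self.has_labels = label_col in rows[0]
        feature_cols = [c for c in rows[0] if c != label_col]
        self.x = torch.tensor([[float(r[c]) for c in feature_cols] for r in rows])
        self.y = (
            torch.tensor([int(r[label_col]) for r in rows])
            if self.has_labels
            else None
        )

    def __len__(self):
        return len(self.x)

    def __getitem__(self, idx):
        if self.has_labels:
            return self.x[idx], self.y[idx]
        return self.x[idx]

# In-memory files stand in for the training and testing CSVs.
train_csv = io.StringIO("label,p0,p1\n7,0,255\n1,128,0\n")
test_csv = io.StringIO("p0,p1\n0,255\n128,0\n")

train_ds = CsvDigits(train_csv)
test_ds = CsvDigits(test_csv)
```

The same class then serves both files: training samples come back as (features, label) pairs and testing samples as features only.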

Image Data Loaders in PyTorch - PyImageSearch A DataLoader accepts a PyTorch dataset and outputs an iterable which enables easy access to data samples from the dataset. On Lines 68-70, we pass our training and validation datasets to the DataLoader class. A PyTorch DataLoader accepts a batch_size so that it can divide the dataset into chunks of samples.

Loading data in PyTorch — PyTorch Tutorials 1.11.0+cu102 documentation Loading the data. Now that we have access to the dataset, we must pass it through torch.utils.data.DataLoader. The DataLoader combines the dataset and a sampler, returning an iterable over the dataset. data_loader = torch.utils.data.DataLoader(yesno_data, batch_size=1, shuffle=True) Copy to clipboard. 4.

Unsupervised Data set reading - vision - PyTorch Forums In particular, the __getitem__ method, which returns a tuple comprising (data, label). The generic loop is something like: for (data, labels) in dataloader: # train / eval code. You're free to ignore the label here and you can train an autoencoder on cifar10, for example, pretty much out of the box.
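Ignoring the label in that loop could look like the sketch below. It uses random tensors instead of CIFAR-10 so that nothing is downloaded, and the tiny linear autoencoder, learning rate and shapes are assumptions for illustration only.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Stand-in for a labelled image dataset: flattened random "images" plus labels.
images = torch.randn(64, 3 * 8 * 8)
labels = torch.randint(0, 10, (64,))
loader = DataLoader(TensorDataset(images, labels), batch_size=16, shuffle=True)

# A deliberately small autoencoder: 192 -> 32 -> 192.
autoencoder = nn.Sequential(nn.Linear(192, 32), nn.ReLU(), nn.Linear(32, 192))
optimizer = torch.optim.SGD(autoencoder.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

losses = []
for data, _ in loader:  # the label is unpacked and then discarded
    optimizer.zero_grad()
    reconstruction = autoencoder(data)
    loss = loss_fn(reconstruction, data)  # the input is its own target
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```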

How to use Datasets and DataLoader in PyTorch for custom text data | by Jake Wherlock | Towards ...

Beginner's Guide to Loading Image Data with PyTorch Create a DataLoader The following steps are pretty standard: first we create a transformed_dataset using the vaporwaveDataset class, then we pass the dataset to DataLoader, along with a few other parameters (you can copy paste these), to get the train_dl. batch_size = 64 transformed_dataset = vaporwaveDataset(ims=X_train)

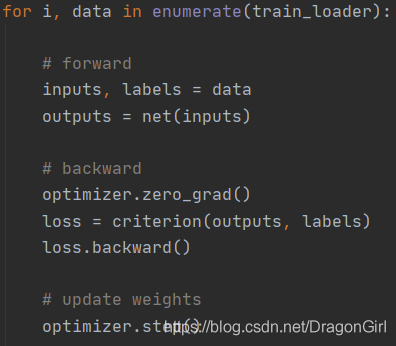

Training with PyTorch — PyTorch Tutorials 1.11.0+cu102 documentation It enumerates data from the DataLoader, and on each pass of the loop does the following: Gets a batch of training data from the DataLoader; Zeros the optimizer's gradients; Performs an inference - that is, gets predictions from the model for an input batch; Calculates the loss for that set of predictions vs. the labels on the dataset
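The per-batch steps listed in this snippet can be sketched as a small function. The snippet is cut off after the loss calculation; the backward pass and optimizer step that follow in the sketch are the usual continuation of such a loop. The linear model, random data and hyperparameters are assumptions.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Assumed toy data: 40 samples, 5 features, 3 classes.
inputs = torch.randn(40, 5)
targets = torch.randint(0, 3, (40,))
training_loader = DataLoader(TensorDataset(inputs, targets), batch_size=8, shuffle=True)

model = nn.Linear(5, 3)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

def train_one_epoch():
    running_loss = 0.0
    for i, (batch_inputs, batch_labels) in enumerate(training_loader):
        optimizer.zero_grad()                  # zero the optimizer's gradients
        outputs = model(batch_inputs)          # get predictions for the batch
        loss = loss_fn(outputs, batch_labels)  # loss of predictions vs. labels
        loss.backward()                        # compute gradients
        optimizer.step()                       # update the model weights
        running_loss += loss.item()
    return running_loss / (i + 1)              # mean loss over the batches

avg_loss = train_one_epoch()
```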
